Is your organization’s IT strategy dictating cloud migration? Does your organization have hardware or software components that are running out of maintenance or lifetime? Whether you need to get new hardware or replace software components, either scenario would require substantial investment.

Perhaps, you are no longer happy with the licensing or pricing of your legacy platform; or have developed a focus on hyperscalers such as Microsoft Azure and Amazon AWS; or simply rethinking your data and AI approach that makes outdated legacy concepts obsolete.

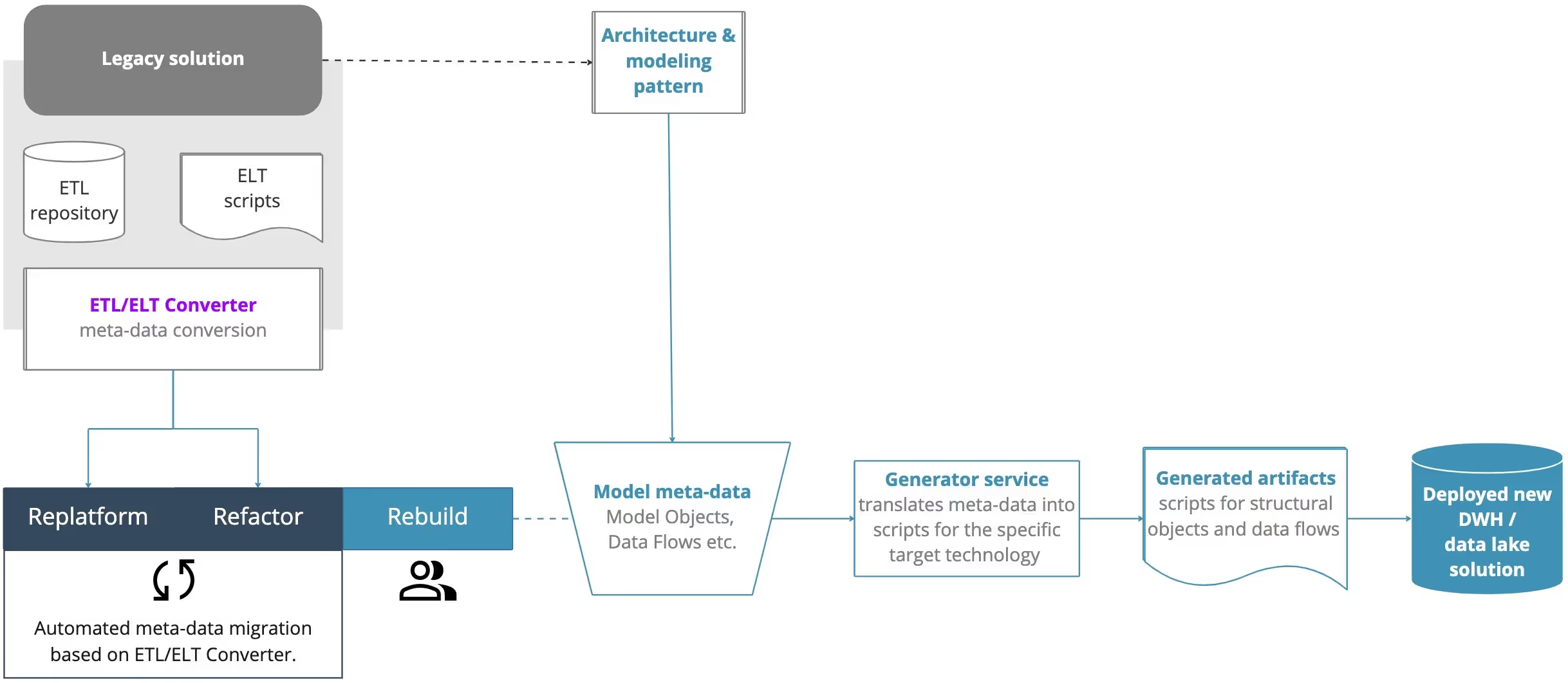

Data warehouse cloud migration strategies

Lift and shift

This means moving your legacy data warehouse to a cloud environment (IaaS) without redesigning or making many changes in the architecture, database, and data store.

However, this would only make sense if you must move on from your on-premise infrastructure quickly, or if your current cloud service works the same as your legacy platform.

Replatforming and refactoring

While replatforming may require changes to the codebases and data store structures, depending on the compatibility of your legacy systems to the intended new cloud-based target system, refactoring takes replatforming to the next level, in which the application is optimized, more standardized, and using more cloud-native features.

Replatforming and refactoring would make sense if your legacy solution is worth migrating, this could be due to many factors, including:

- You have many mappings containing complex transformations that are still valid and valuable.

- The architecture is still suitable, and sufficiently standardized to remain scalable and maintainable.

- The new target platform supports all functionalities used in your legacy solution.

Rebuilding, or building anew

When transitioning to the cloud, many organizations would take the opportunity to discontinue existing legacy systems structures and build their cloud native solutions from scratch.

This would make sense if the structure of your legacy solution no longer meets the requirements and/or contains very few patterns and standardization components.

In reality, most use cases consist of a combination of the replatforming, refactoring, and rebuilding strategies.

Process of replatforming, refactoring, rebuilding your migration

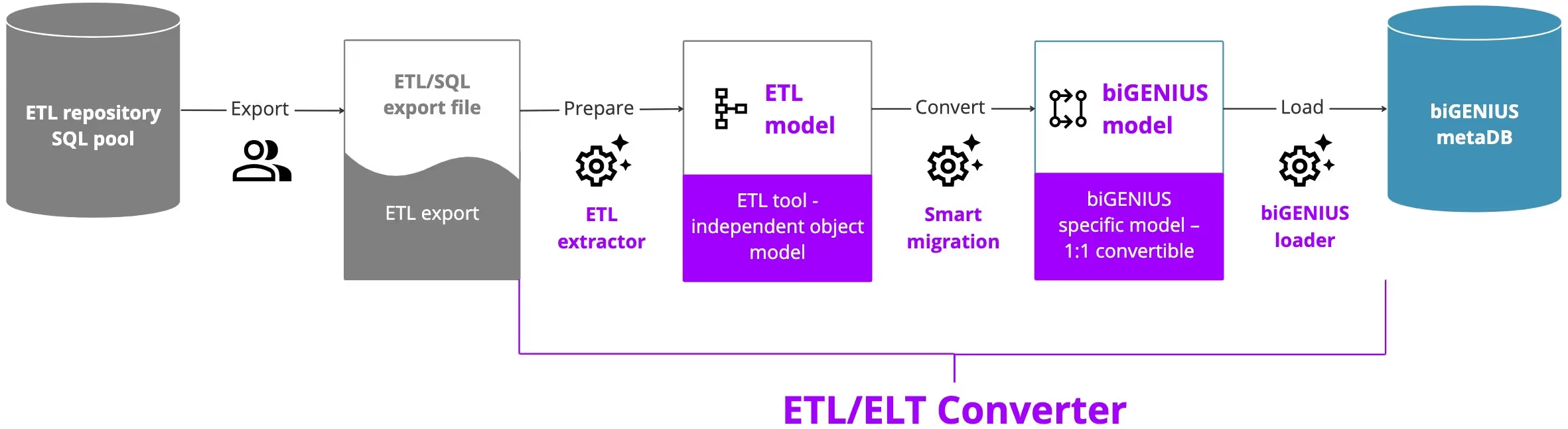

Tasks and coverage of the ETL/ELT Converter

The goal here is to migrate data from the metadata repository of your source tool e.g. Oracle Warehouse Builder to the target tool’s metadata repository, in this case – biGENIUS.

To do this, you first need to export the metadata that describes your ETL processes and objects into an export file, then the biGENIUS Extractor would prepare and extract the data into an independent and lightweight ETL model that can handle the typical definitions of ETL tools, which the biGENIUS Smart Converter would transform into the target ETL model that would then be loaded into the biGENIUS metadata repository. You can then create all the data warehouse objects and processes that have already been defined in the source tool.

Steps of the automated ETL migration process

- Metadata migration

- Code generation

- Deploy and run

- Compare and test

- Final migration load

Conclusion

Migrating any legacy data warehouse solution is a challenging task, but moving from an old system with increasing limitations to an up-to-date and scalable cloud service sooner rather than later can be a constructive decision that can help eliminating issues down the line.

No matter what your use case is, if you are considering a low-cost, efficient, hassle-free migration, it would also be a perfect opportunity to utilize smart data automation for the long-term benefits of your analytical data ecosystem by minimizing manual work, human error, and ultimately, resources.