Introduction

While centralized data warehouses provide control and consistency, they create bottlenecks that limit organizational agility. As the demand for real-time insights grows, many organizations are moving toward more agile, decentralized models like data mesh and data fabric. This evolution empowers domain teams to take ownership of their data products but introduces new challenges:

- Increased need for governance across distributed teams

- Higher demand for standardization and enablement

- Greater infrastructure complexity

- More frequent content overlap and maintenance overhead

At the recent Big Data & AI World Frankfurt conference, biGENIUS CTO Thomas Gassmann and Vincent Diallo-Nort, Senior Consultant at Visium (a Swiss AI & Data consultancy), shared insights from a real-world data mesh implementation that successfully navigated these challenges.

From centralized control to distributed ownership

Traditional enterprise data architectures operated on a hub-and-spoke model where specialized teams controlled access to centralized data warehouses. This approach ensured data consistency and security but created significant bottlenecks for business units requiring rapid access to insights.

The shift toward data democratization introduced self-service business intelligence and data lakes, expanding access but often at the cost of governance and quality. This led to the emergence of data mesh architecture: a distributed approach that treats data as products with defined ownership, governance frameworks, and service-level agreements.

However, implementing data mesh at enterprise scale introduces operational complexities that require systematic solutions. To succeed, Chief Data Officers must enable domain teams to build products autonomously while maintaining enterprise standards for quality and governance.

Enterprise implementation: multi-domain data products

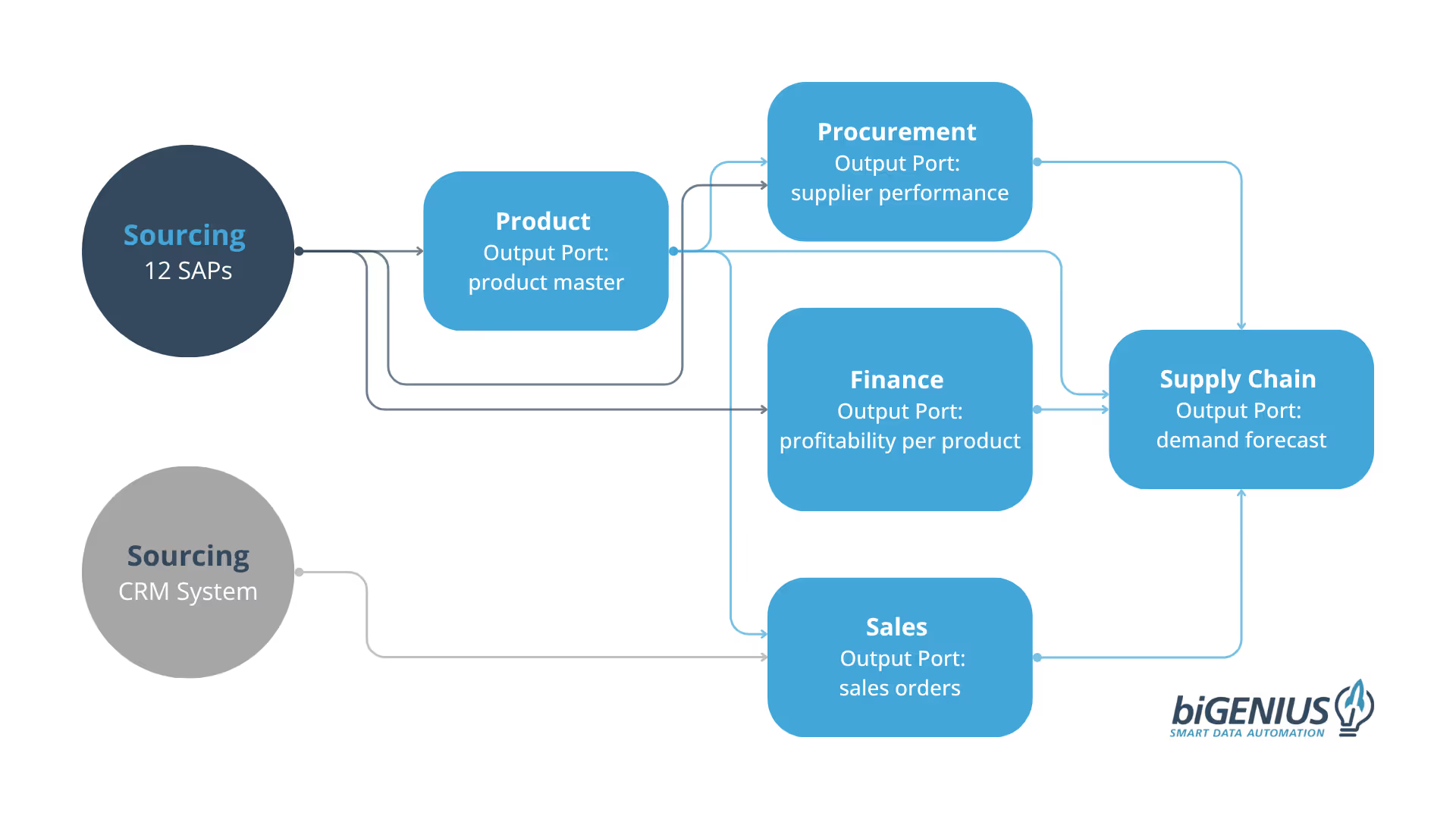

Our recent engagement involved an enterprise client with a hub-and-spoke organizational structure requiring independent team operations across multiple domains. The implementation encompassed data products built on Databricks with stringent compliance, code quality, and modeling requirements.

The project scope included:

- Sourcing (integrating 12 SAP systems and CRM platforms)

- Procurement (supplier performance analytics)

- Finance (product profitability analysis)

- Supply Chain (demand forecasting)

- Sales (order management and analytics)

Each data product required distinct output specifications while maintaining consistency in governance and technical implementation standards.

Systematic pattern implementation

The implementation succeeded by establishing repeatable patterns that could scale across the organization:

Incremental scaling approach: Rather than implementing a comprehensive data mesh immediately, we began with targeted use case development. This allowed validation and refinement of patterns before broader organizational deployment.

Template standardization: Standardized development templates ensured consistency across distributed teams while enabling autonomous operation within established frameworks.

Metadata governance: Consistent metadata management became the integration foundation, enabling data product discovery and governance across organizational boundaries.

Platform flexibility: Architecture patterns supported modern platforms like Databricks while maintaining adaptability for evolving technology requirements.

Comprehensive automation: Process automation extended from development through deployment, maintaining consistency and velocity as the data product portfolio expanded.
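To make the template and metadata patterns more concrete, here is a minimal sketch of how a shared template and a metadata check might look in Python. It is an illustration only and is not taken from the project or from biGENIUS-X: the names `DataProductSpec`, `from_template`, `validate`, and the required tags and classification values are all assumptions made for this example.

```python
from dataclasses import dataclass, field

# Hypothetical enterprise-wide rules (assumptions for this sketch).
REQUIRED_TAGS = {"owner", "domain", "classification"}
ALLOWED_CLASSIFICATIONS = {"public", "internal", "confidential"}


@dataclass
class DataProductSpec:
    """Hypothetical descriptor that every domain team fills in."""
    name: str
    metadata: dict[str, str] = field(default_factory=dict)
    output_tables: list[str] = field(default_factory=list)


def from_template(name: str, domain: str, owner: str) -> DataProductSpec:
    """Create a spec pre-filled with the shared defaults every team starts from."""
    return DataProductSpec(
        name=name,
        metadata={"owner": owner, "domain": domain, "classification": "internal"},
    )


def validate(spec: DataProductSpec) -> list[str]:
    """Return a list of violations; an empty list means the spec is compliant."""
    problems = []
    # Every required metadata tag must be present.
    for tag in sorted(REQUIRED_TAGS - set(spec.metadata)):
        problems.append(f"{spec.name}: missing metadata tag '{tag}'")
    # If a classification is given, it must be one of the allowed values.
    classification = spec.metadata.get("classification")
    if classification is not None and classification not in ALLOWED_CLASSIFICATIONS:
        problems.append(f"{spec.name}: unknown classification '{classification}'")
    # A data product without declared outputs cannot be discovered or consumed.
    if not spec.output_tables:
        problems.append(f"{spec.name}: no output tables declared")
    return problems
```

A team would start from `from_template(...)`, add its own output tables, and run `validate(...)` before publishing. The idea is that teams stay free to define their outputs while the shared check keeps metadata consistent across domains.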

The Role of biGENIUS-X

biGENIUS-X played a central role in the project by automating core aspects of the data lifecycle. Its model-driven platform enabled the generation of:

- Native code tailored to the target environment

- Standardized documentation

- Rich, consistent metadata

The integrated Data Marketplace further amplified value by:

- Enabling discovery and reuse of data products

- Clarifying ownership and access

- Supporting federated teams with shared templates

- Streamlining publishing and governance
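As a rough illustration of what discovery and clear ownership mean in practice, the sketch below models a tiny in-memory catalog in Python. It does not represent the biGENIUS-X Data Marketplace or its API: `Marketplace`, `CatalogEntry`, and their methods are hypothetical names chosen for this example, and a real marketplace would persist entries and enforce access rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CatalogEntry:
    """Hypothetical catalog record for one published data product."""
    name: str
    domain: str
    owner: str
    description: str


class Marketplace:
    """Hypothetical in-memory catalog, for illustration only."""

    def __init__(self) -> None:
        self._entries: dict[str, CatalogEntry] = {}

    def publish(self, entry: CatalogEntry) -> None:
        # Reject duplicate names so each product has exactly one owner of record.
        if entry.name in self._entries:
            raise ValueError(f"data product '{entry.name}' is already published")
        self._entries[entry.name] = entry

    def search(self, keyword: str) -> list[CatalogEntry]:
        # Case-insensitive match on name and description, sorted by name.
        needle = keyword.lower()
        hits = [
            e for e in self._entries.values()
            if needle in e.name.lower() or needle in e.description.lower()
        ]
        return sorted(hits, key=lambda e: e.name)

    def owner_of(self, name: str) -> str:
        # Raises KeyError if the product has not been published.
        return self._entries[name].owner
```

With a catalog like this, a finance analyst searching for "product" would find both a profitability product and a demand forecast that mentions products in its description, and could look up who owns each one before requesting access.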

This combination of automation and standardization allowed the organization to scale its data mesh approach confidently.

Key Takeaways

Data products are not static assets, but dynamic, evolving services that must remain responsive to business change and stakeholder needs. This shift requires organizations to rethink their approach to data delivery, from both a technological and operational perspective.

- Start small, scale smart: Begin with focused use cases, but lay the groundwork for scalability from the outset by applying repeatable patterns.

- Prioritize outcomes: Delivering fast, meaningful results builds momentum and drives adoption across the organization.

- Standardize through metadata: A consistent metadata strategy ensures interoperability, discoverability, and governance across decentralized teams.

- Automate to enable growth: Automation is not just about efficiency; it also makes data products sustainable and future-ready.

With biGENIUS-X, data teams move beyond managing fragmented pipelines. They enable a cohesive, governed, and responsive data ecosystem where data products are discoverable, trusted, and built for continuous improvement.

Presentation video

You can watch the full presentation, "How Data Automation Brings Data Products to Life", here, courtesy of Visium: