Data quality is central to modern data management: it affects business outcomes, operational reliability, and confidence in the insights derived from data. To address this, biGENIUS-X introduces a redesigned data quality framework that replaces a limited set of fixed checks with a comprehensive, configurable system.

Framework redesign: configurable and scalable

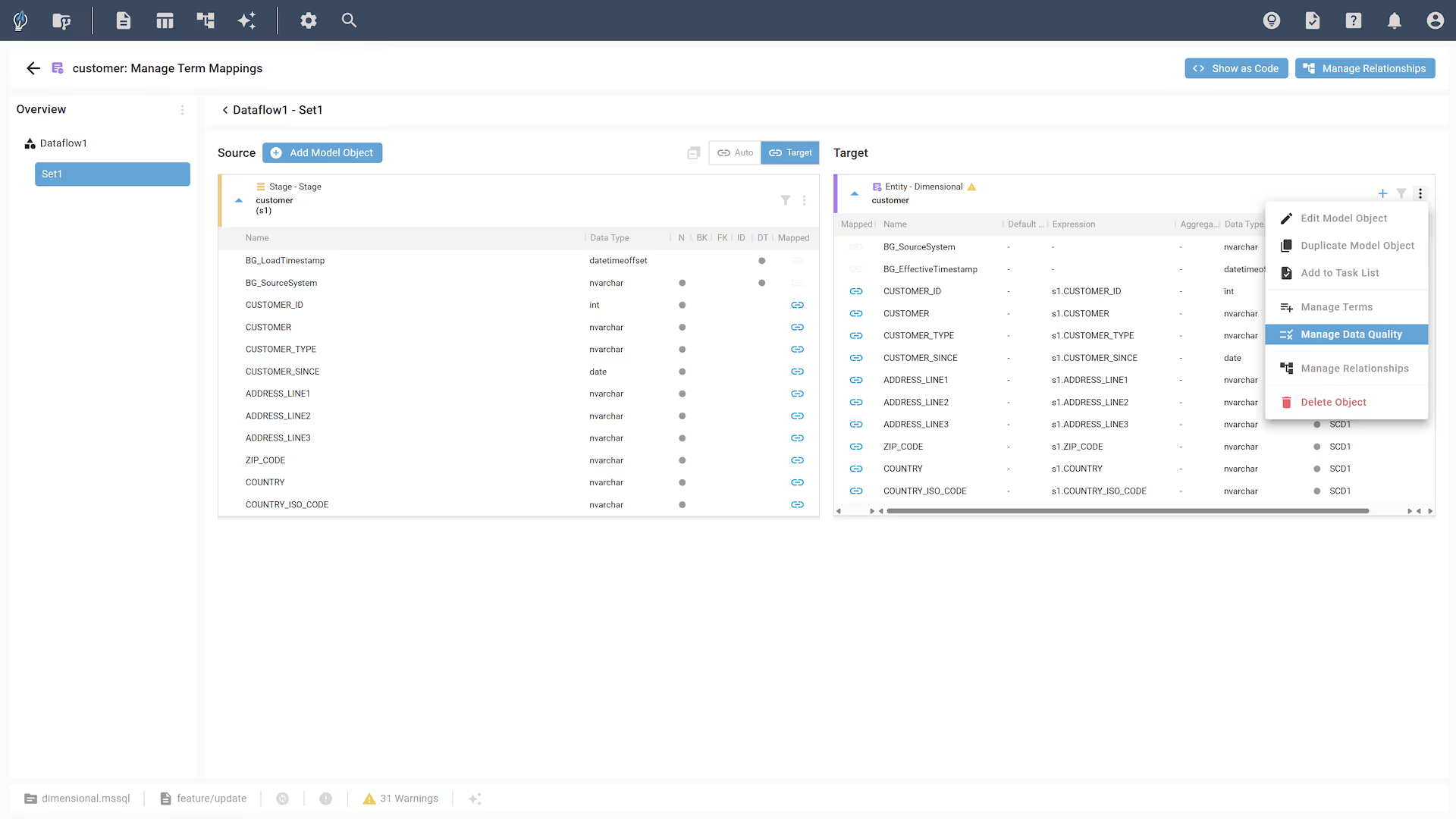

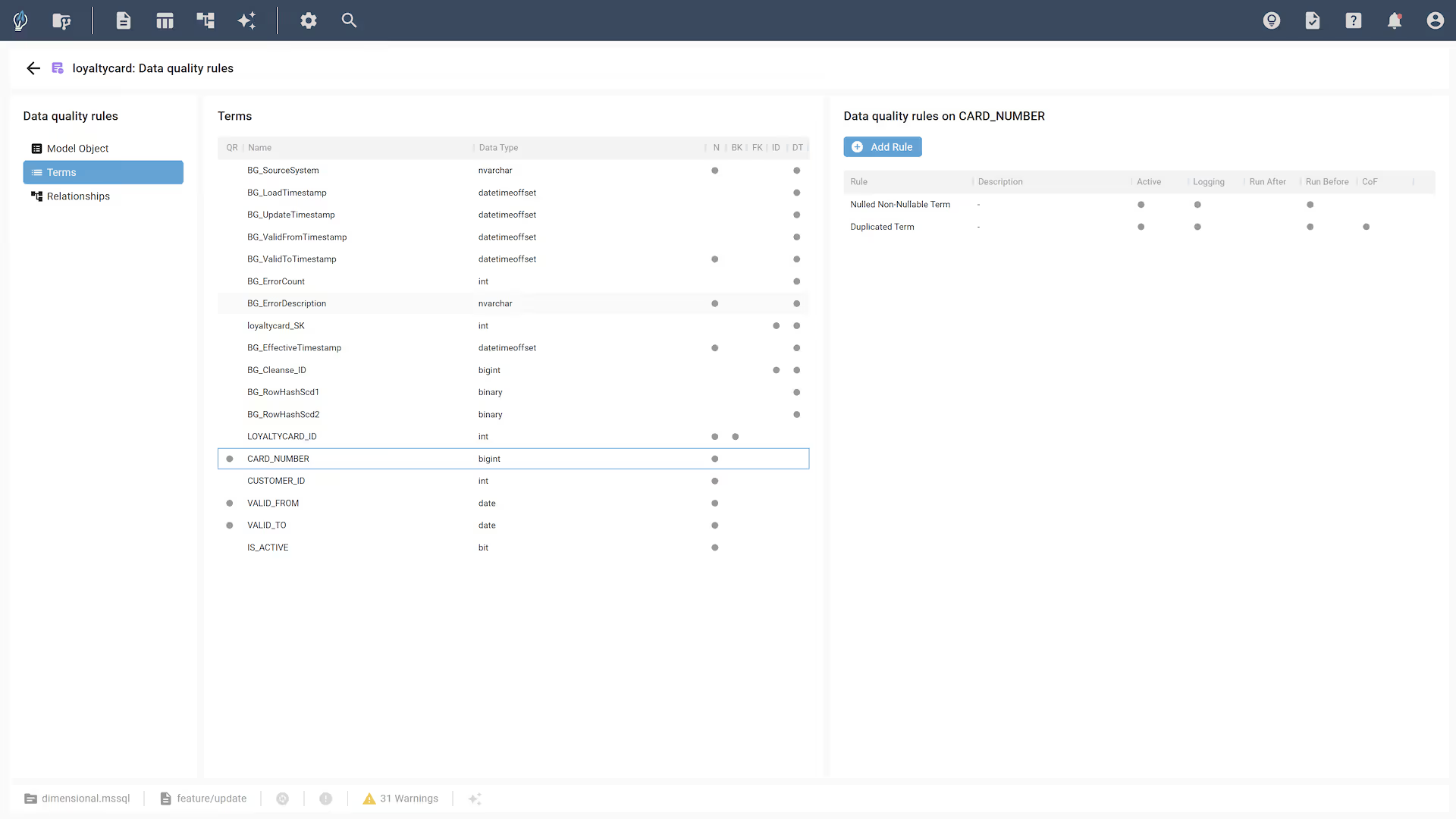

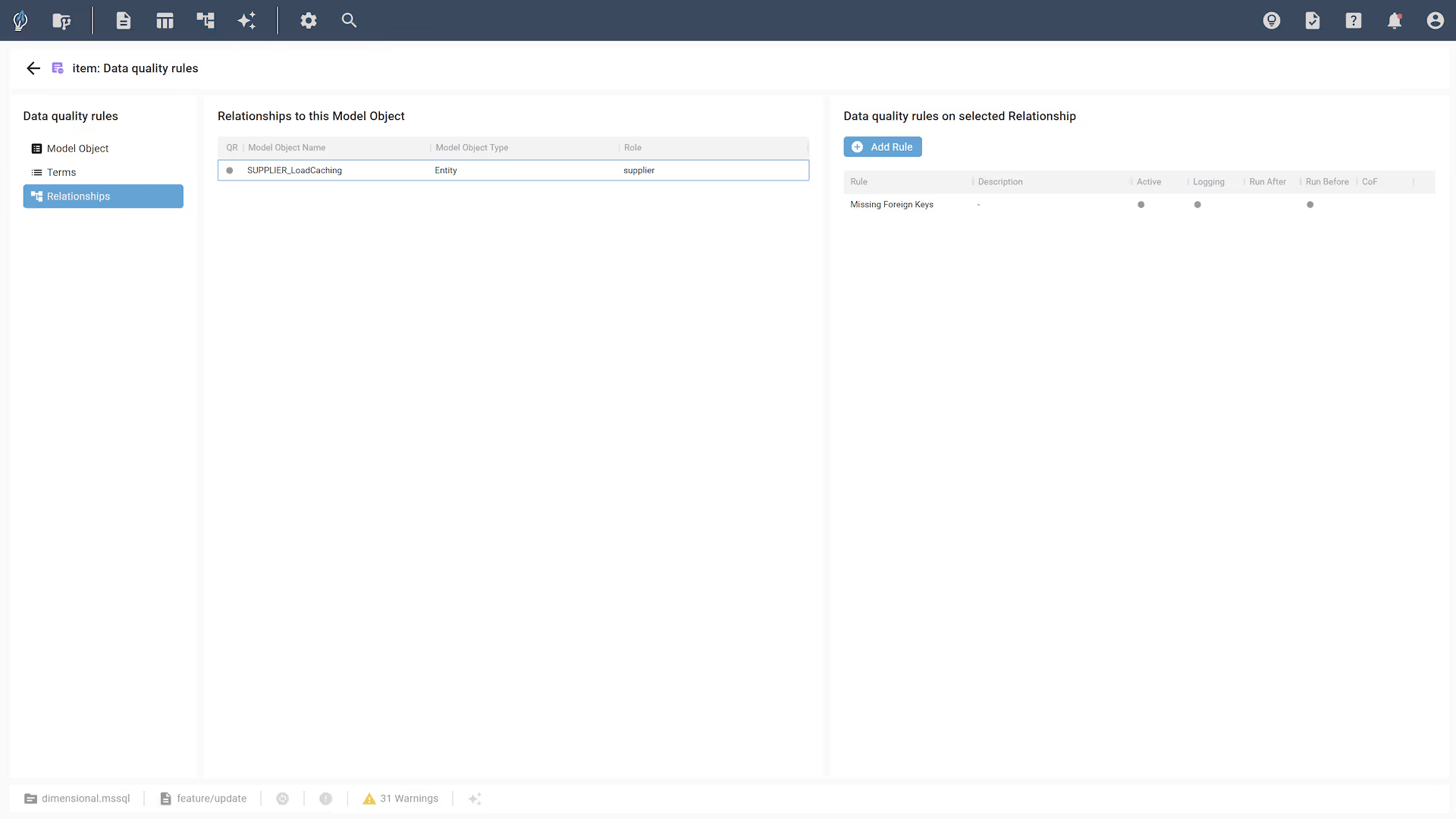

Previously, data quality checks in biGENIUS-X were limited to a fixed set of cleansing actions in the Dimensional Generators, and those actions could be neither configured nor customized. The redesigned framework changes this: users can now access and manage data quality rules directly within their data modeling process.

The new system gives users more flexibility in setting up data quality checks and lets organizations embed quality control into their workflows with greater precision and scalability than before. Users can:

- Modify, disable, or remove default validation rules

- Define custom rules per model object, term, or relationship

- Control rule impact and execution timing based on organizational requirements

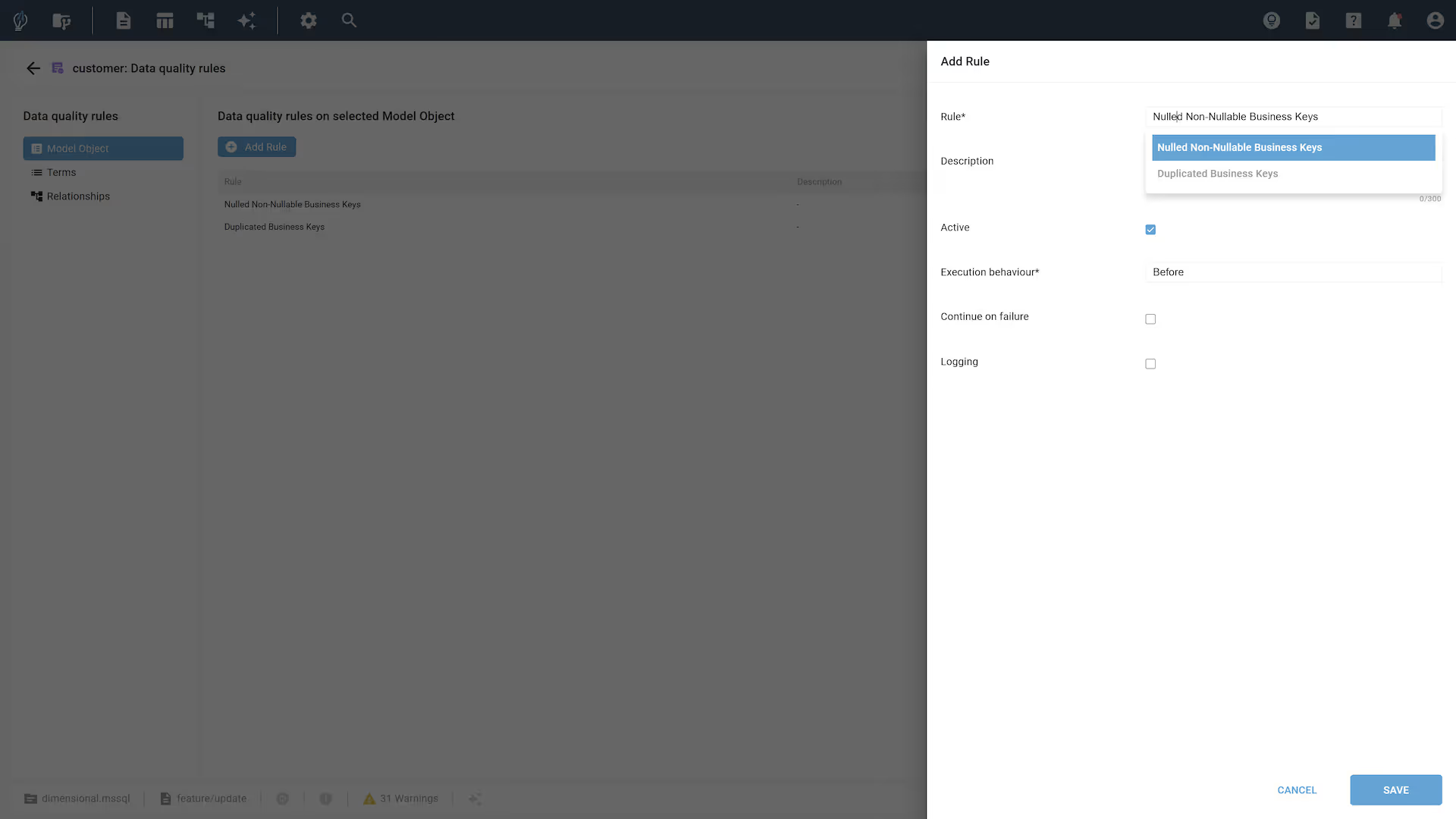
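The configuration options above can be pictured as a small rule registry. The sketch below is a hypothetical plain-Python model, not the biGENIUS-X configuration format: the `Rule` fields, the rule names, and the `Customer`/`Order` objects are assumptions chosen only to illustrate modifying, disabling, removing, and adding rules.

```python
from dataclasses import dataclass, replace


# Hypothetical rule definition; field names are illustrative assumptions,
# not the biGENIUS-X configuration schema.
@dataclass(frozen=True)
class Rule:
    name: str
    scope: str                      # "model_object", "term", or "relationship"
    target: str                     # the model object, term, or relationship checked
    check: str                      # e.g. "not_null", "unique", "referential_integrity"
    enabled: bool = True
    abort_on_failure: bool = False  # impact: abort the load or only log
    log_violations: bool = True


# Hypothetical default rules for a "Customer" model object.
rules = {
    r.name: r
    for r in [
        Rule("customer_bk_not_null", "model_object", "Customer", "not_null", abort_on_failure=True),
        Rule("customer_bk_unique", "model_object", "Customer", "unique", abort_on_failure=True),
        Rule("customer_email_not_null", "term", "Customer.Email", "not_null"),
        Rule("customer_phone_unique", "term", "Customer.Phone", "unique"),
        Rule("customer_name_unique", "term", "Customer.Name", "unique"),
    ]
}

# Modify a default: treat a missing email as critical in this project.
rules["customer_email_not_null"] = replace(rules["customer_email_not_null"], abort_on_failure=True)

# Disable a default: shared phone numbers are acceptable here.
rules["customer_phone_unique"] = replace(rules["customer_phone_unique"], enabled=False)

# Remove a default that does not apply: customer names legitimately repeat.
del rules["customer_name_unique"]

# Add a custom rule on a relationship.
rules["order_customer_fk"] = Rule("order_customer_fk", "relationship", "Order->Customer", "referential_integrity")

active = [r.name for r in rules.values() if r.enabled]
print(active)
# → ['customer_bk_not_null', 'customer_bk_unique', 'customer_email_not_null', 'order_customer_fk']
```

Using an immutable dataclass with `dataclasses.replace` keeps each default intact and makes every project-specific change an explicit override.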

Rule scope and validation types

Validation logic is now organized across three primary scopes: model objects, terms, and relationships. Multiple validation rules can be defined per scope, enabling fine-grained control over each layer of the model. Each rule can be:

- Individually adjusted to meet project-specific conditions

- Deactivated or removed if not applicable

- Supplemented with additional custom rules as needed

Model object-level validation

This level supports NOT NULL checks for business keys, uniqueness checks that identify duplicate business keys, and historization checks that flag outdated versions when loading slowly changing dimensions. Together, these rules protect analytical outputs against missing or duplicate keys and temporal inconsistencies.
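A minimal sketch of the three model object-level checks, assuming staged rows arrive as Python dictionaries with a `customer_bk` business key and a `valid_from` date. The function names and data are hypothetical, and the historization rule is interpreted here as "an incoming version must be newer than the current version already stored", which is an assumption about what "outdated" means.

```python
from collections import Counter
from datetime import date

# Hypothetical staged rows for a "Customer" dimension; column names are assumptions.
staged = [
    {"customer_bk": "C1", "name": "Ada", "valid_from": date(2024, 3, 1)},
    {"customer_bk": None, "name": "Bob", "valid_from": date(2024, 3, 1)},
    {"customer_bk": "C2", "name": "Cy", "valid_from": date(2024, 3, 1)},
    {"customer_bk": "C2", "name": "Cyd", "valid_from": date(2024, 3, 2)},
    {"customer_bk": "C3", "name": "Di", "valid_from": date(2023, 12, 31)},
]

# valid_from of the current version already stored in the dimension (assumed).
current_versions = {"C1": date(2024, 1, 1), "C3": date(2024, 2, 1)}


def null_business_keys(rows, key):
    """NOT NULL check: rows without a business key."""
    return [r for r in rows if r[key] is None]


def duplicate_business_keys(rows, key):
    """Uniqueness check: rows whose business key occurs more than once."""
    counts = Counter(r[key] for r in rows if r[key] is not None)
    return [r for r in rows if r[key] is not None and counts[r[key]] > 1]


def outdated_versions(rows, key, current):
    """Historization check: rows that are not newer than the stored current version."""
    return [r for r in rows if r[key] in current and r["valid_from"] <= current[r[key]]]


for flagged in (null_business_keys(staged, "customer_bk"),
                duplicate_business_keys(staged, "customer_bk"),
                outdated_versions(staged, "customer_bk", current_versions)):
    print([r["name"] for r in flagged])
# prints ['Bob'], then ['Cy', 'Cyd'], then ['Di']
```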

Term-level validation

Rules at the term level focus on the integrity of individual attribute values across records. They detect null values where completeness is expected and highlight duplicate values that may indicate anomalies or data entry issues.
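The term-level checks can be sketched as a pass over each attribute across all records. This is a hypothetical illustration, not biGENIUS-X code: `check_terms`, the `email` and `tax_id` terms, and the choice to treat empty strings as missing are all assumptions.

```python
from collections import Counter


def check_terms(rows, complete_terms, unique_terms):
    """Collect term-level violations: null positions and duplicated values."""
    findings = {}
    for term in complete_terms:
        # Assumption: empty strings count as missing, like None.
        nulls = [i for i, r in enumerate(rows) if r.get(term) in (None, "")]
        if nulls:
            findings[(term, "null")] = nulls
    for term in unique_terms:
        counts = Counter(r.get(term) for r in rows if r.get(term) not in (None, ""))
        duplicates = sorted(v for v, n in counts.items() if n > 1)
        if duplicates:
            findings[(term, "duplicate")] = duplicates
    return findings


# Hypothetical records with two terms, "email" and "tax_id".
rows = [
    {"email": "a@example.com", "tax_id": "T1"},
    {"email": "", "tax_id": "T2"},
    {"email": "a@example.com", "tax_id": None},
    {"email": "c@example.com", "tax_id": "T2"},
]
findings = check_terms(rows, complete_terms=["email", "tax_id"], unique_terms=["email", "tax_id"])
for (term, kind), detail in findings.items():
    print(term, kind, detail)
```

Missing values are excluded from the duplicate count so that a column with many gaps is reported once as incomplete rather than also as a "duplicate" of the empty value.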

Relationship-level validation

Relationship validation verifies referential integrity between linked model objects. Failures here can result in orphaned records and incomplete joins, directly impacting downstream transformations and reporting.
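A referential integrity check reduces to finding child rows whose foreign key has no matching parent key. The sketch below assumes hypothetical `Order` and `Customer` rows held as dictionaries; it is an illustration of the technique, not the code biGENIUS-X generates.

```python
def orphaned_rows(child_rows, foreign_key, parent_rows, primary_key):
    """Referential integrity: child rows whose foreign key has no matching parent."""
    parent_keys = {p[primary_key] for p in parent_rows}
    # A NULL foreign key never matches and is reported too (a modeling assumption).
    return [c for c in child_rows if c[foreign_key] not in parent_keys]


# Hypothetical "Order" rows referencing the "Customer" model object.
customers = [{"customer_bk": "C1"}, {"customer_bk": "C2"}]
orders = [
    {"order_id": 1, "customer_bk": "C1"},
    {"order_id": 2, "customer_bk": "C9"},
    {"order_id": 3, "customer_bk": None},
]
print([o["order_id"] for o in orphaned_rows(orders, "customer_bk", customers, "customer_bk")])
# → [2, 3]
```

In a relational target the same check is typically an anti-join (a `LEFT JOIN` that keeps only child rows without a matching parent); the set lookup above is its in-memory equivalent.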

Additional rule types are planned for future releases.

Load behavior and error handling

biGENIUS-X now provides granular control over how data quality issues affect the loading process:

- For critical issues such as duplicate business keys, the load can be configured to abort automatically.

- For less critical issues, such as missing foreign keys, the load can proceed, with exceptions logged for further analysis.

All issues are captured in the Data Quality Error Table and made accessible through the Data Quality Result View, ensuring traceability and enabling timely remediation.
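The difference between aborting and continuing can be sketched as a loader that evaluates every rule, records all violations in an error table, and only then decides whether to load. `run_load`, `LoadAborted`, and the rule dictionaries are hypothetical names, and loading rows that have only non-critical issues is an assumption about what "the load can proceed" means.

```python
from collections import Counter


class LoadAborted(Exception):
    """Raised when a rule configured to abort the load fails (hypothetical)."""


def duplicate_bk(rows):
    counts = Counter(r["customer_bk"] for r in rows)
    return [r for r in rows if counts[r["customer_bk"]] > 1]


def missing_country_fk(rows):
    return [r for r in rows if r["country_code"] is None]


# Hypothetical rules: duplicate business keys abort, missing foreign keys are only logged.
RULES = [
    {"name": "customer_bk_unique", "check": duplicate_bk, "abort_on_failure": True},
    {"name": "customer_country_fk", "check": missing_country_fk, "abort_on_failure": False},
]


def run_load(rows, rules, error_table, target):
    """Log every violation, abort if a critical rule failed, otherwise load."""
    critical = []
    for rule in rules:
        violations = rule["check"](rows)
        error_table.extend({"rule": rule["name"], "row": v} for v in violations)
        if violations and rule["abort_on_failure"]:
            critical.append(rule["name"])
    if critical:
        raise LoadAborted(f"critical rule(s) failed: {', '.join(critical)}")
    target.extend(rows)  # assumption: rows with non-critical issues are still loaded


rows = [
    {"customer_bk": "C1", "country_code": "CH"},
    {"customer_bk": "C2", "country_code": None},
]
errors, target = [], []
run_load(rows, RULES, errors, target)
print(len(target), [e["rule"] for e in errors])  # 2 ['customer_country_fk']
```

Logging before raising means that even an aborted load leaves a complete record of what went wrong, which matches the goal of capturing all issues for remediation.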

Logging and diagnostics

By default, all rule violations are logged together with:

- A detailed, human-readable error description

- The specific data that triggered the rule

To avoid excessive logging for frequently occurring non-critical errors, users may choose to disable logging for selected rules.
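A log record carrying both a readable description and the offending data might look like the sketch below. The record fields, the `description` template, and the per-rule `log_violations` flag are assumptions for illustration; the actual Data Quality Error Table layout is not described here.

```python
import json
from datetime import datetime, timezone


def log_violations(rule, violations, error_table):
    """Append one record per violation; return how many were logged."""
    if not rule["log_violations"]:
        return 0  # logging disabled for this rule
    for row in violations:
        error_table.append({
            "rule": rule["name"],
            "description": rule["description"].format(**row),  # human-readable text
            "data": json.dumps(row, sort_keys=True, default=str),  # triggering data
            "logged_at": datetime.now(timezone.utc).isoformat(),
        })
    return len(violations)


# Hypothetical rules: one logged, one frequent non-critical rule with logging off.
country_rule = {
    "name": "customer_country_fk",
    "description": "Customer {customer_bk} has no country code",
    "log_violations": True,
}
phone_rule = {
    "name": "customer_phone_format",
    "description": "Customer {customer_bk} has phone {phone} in an unexpected format",
    "log_violations": False,
}

table = []
log_violations(country_rule, [{"customer_bk": "C2", "country_code": None}], table)
log_violations(phone_rule, [{"customer_bk": "C3", "phone": "079 123"}], table)
print(len(table), table[0]["description"], table[0]["data"])
```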

Execution timing options

Data quality rules are executed prior to data loading by default, allowing any invalid records to be handled before integration. An upcoming release will extend this functionality to support post-load validation, ensuring all data is ingested while enabling deferred error handling and resolution.
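The two timing options can be contrasted in a short sketch. Both functions are hypothetical; holding invalid rows in a separate `rejected` list is an assumption about how they are "handled" before integration, and since post-load validation is not yet available, the second function only illustrates the announced behavior.

```python
def pre_load_validation(rows, is_valid, target, rejected):
    """Default timing: validate first, so invalid rows never reach the target."""
    for row in rows:
        (target if is_valid(row) else rejected).append(row)


def post_load_validation(rows, is_valid, target, error_log):
    """Post-load timing: ingest everything, then flag newly loaded invalid rows."""
    start = len(target)
    target.extend(rows)
    error_log.extend(r for r in target[start:] if not is_valid(r))


def has_business_key(row):
    # Hypothetical rule: the business key must be present.
    return row["bk"] is not None


rows = [{"id": 1, "bk": "A"}, {"id": 2, "bk": None}]

pre_target, rejected = [], []
pre_load_validation(rows, has_business_key, pre_target, rejected)

post_target, error_log = [], []
post_load_validation(rows, has_business_key, post_target, error_log)

print(len(pre_target), len(rejected))    # 1 1
print(len(post_target), len(error_log))  # 2 1
```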

Conclusion and roadmap

biGENIUS-X’s enhanced data quality framework marks a shift from rigid cleansing logic to an extensible, rule-based architecture. It introduces multi-scope validation, configurable responses, flexible execution timing, and comprehensive logging.

Looking ahead, biGENIUS-X will continue to expand its data quality capabilities with:

- Support for the Operational Data Store (ODS) and Data Vault Generators

- Post-load validation execution

- Additional rule types

These improvements reflect biGENIUS-X's commitment to evolving with the needs of modern data teams. User feedback will continue to guide future development.