Modern data architectures promise faster insights, better decisions, more value from information assets. Yet despite substantial investments in cutting-edge tools and infrastructure, poor data quality remains one of the most persistent obstacles to reliable insights, often because foundational data quality measures are missing or misaligned.

Data quality must be an integral part of how data pipelines are created, validated, and maintained. This includes defining relevant quality checks that align with business needs and enforcing them as part of the code that runs those pipelines.

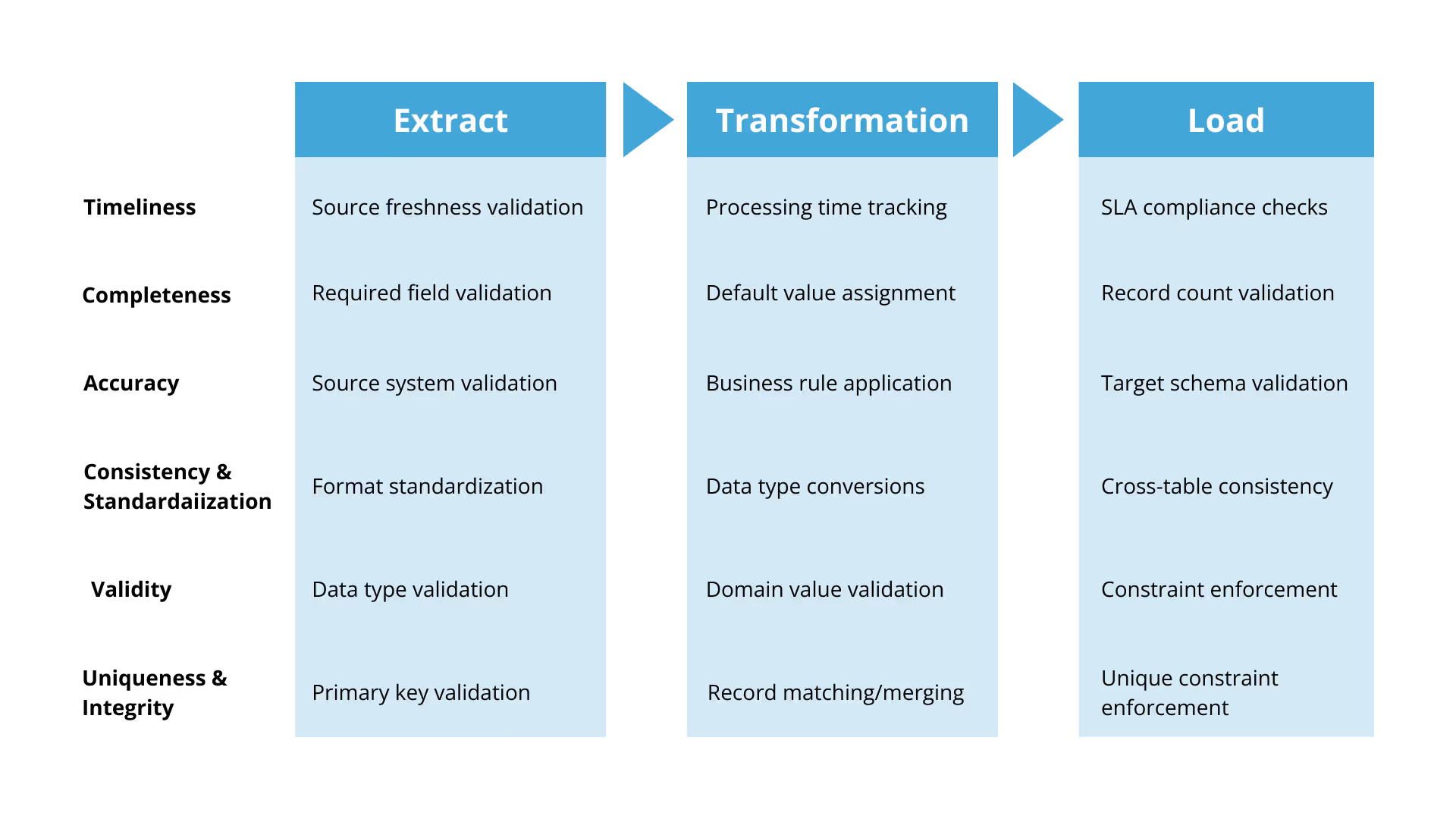

The six dimensions of data quality

A robust quality strategy simultaneously addresses multiple dimensions of fitness for use:

Accuracy

Data must reflect the real-world entities or events it represents. This includes schema validation, source reconciliation, and applying business rules to computed fields.

Completeness

Complete datasets are essential for downstream processes and decision-making. Missing customer IDs or null transaction dates can invalidate entire analyses or introduce systematic bias.

Consistency

Consistency ensures that data has no contradictions or conflicting values. This means uniform data types and formats, maintained referential integrity, and reconciliation between related datasets.

Timeliness

Data must arrive according to business needs. Real-time fraud detection requires different freshness standards than monthly reporting—both need monitoring and validation.

Validity

Valid data conforms to expected formats, standards, or domain rules. Ensuring data formats are correct and enforcing logical and domain-specific constraints throughout transformation and loading helps preserve validity.

Uniqueness

Duplicated values distort reporting and analysis. Uniqueness ensures each entity is represented once and redundancies are removed.

This table illustrates how each data quality dimension is addressed during the Extract, Transformation, and Load stages:

Common pitfalls in data quality management

Even with awareness of these dimensions, organizations often fall into common patterns that compromise data quality:

Delayed validation: If checks occur only after loading, tracing issues back to their source becomes time-consuming and costly.

Generic rules: Without tailoring validation to business-specific use cases, critical context can be missed.

Manual enforcement: Requiring manual effort to review quality increases the risk of inconsistency and human error.

These patterns not only slow development cycles but compromise reliability as data volumes grow.

Ensuring data quality by design

Data quality methods

Effective data quality management relies on a comprehensive toolkit of automated techniques that can be applied across the ETL pipeline:

Automated anomaly detection: Use rule-based or machine-learning algorithms to flag unusual or suspect records early during extraction or transformation. Track data distributions and flag deviations from expected patterns.

Deduplication: Detect and remove duplicate records using unique identifiers. Merge duplicate entities across different source systems during transformation.

Validation checks: Ensure data type match, and accuracy is preserved during transformations. Enforce common formats (e.g. dates, email formats, ID structures), range and pattern validations, and referential integrity.

Standardization: Convert values into consistent formats, such as standardized addresses or date formats during transformation. Align business keys of data from different sources and implement automated validation of predefined data contracts.

Error correction rules: Automatically fix common data issues using predefined rules (e.g. correcting typos, filling default values). Apply business-specific rules and handle late arriving data to fill gaps in previously loaded data.

Data enrichment: Automatically augment missing or incomplete data fields using external reference sources or lookup tables. Calculate new features from existing data (e.g., customer lifetime value) and interpolate missing data points.

Load-time controls: Reconcile record counts between source and target, accounting for legitimate filtering. Apply automatic rollbacks, aborts or alerts when serious quality breaches are detected.

Continuous monitoring and reporting: Track quality metrics like percentage of nulls, duplicates, or invalid records over time through dashboards and alerts. Monitor processing times, track batch completion and identify delays in data availability to ensure data availability within SLA requirements.

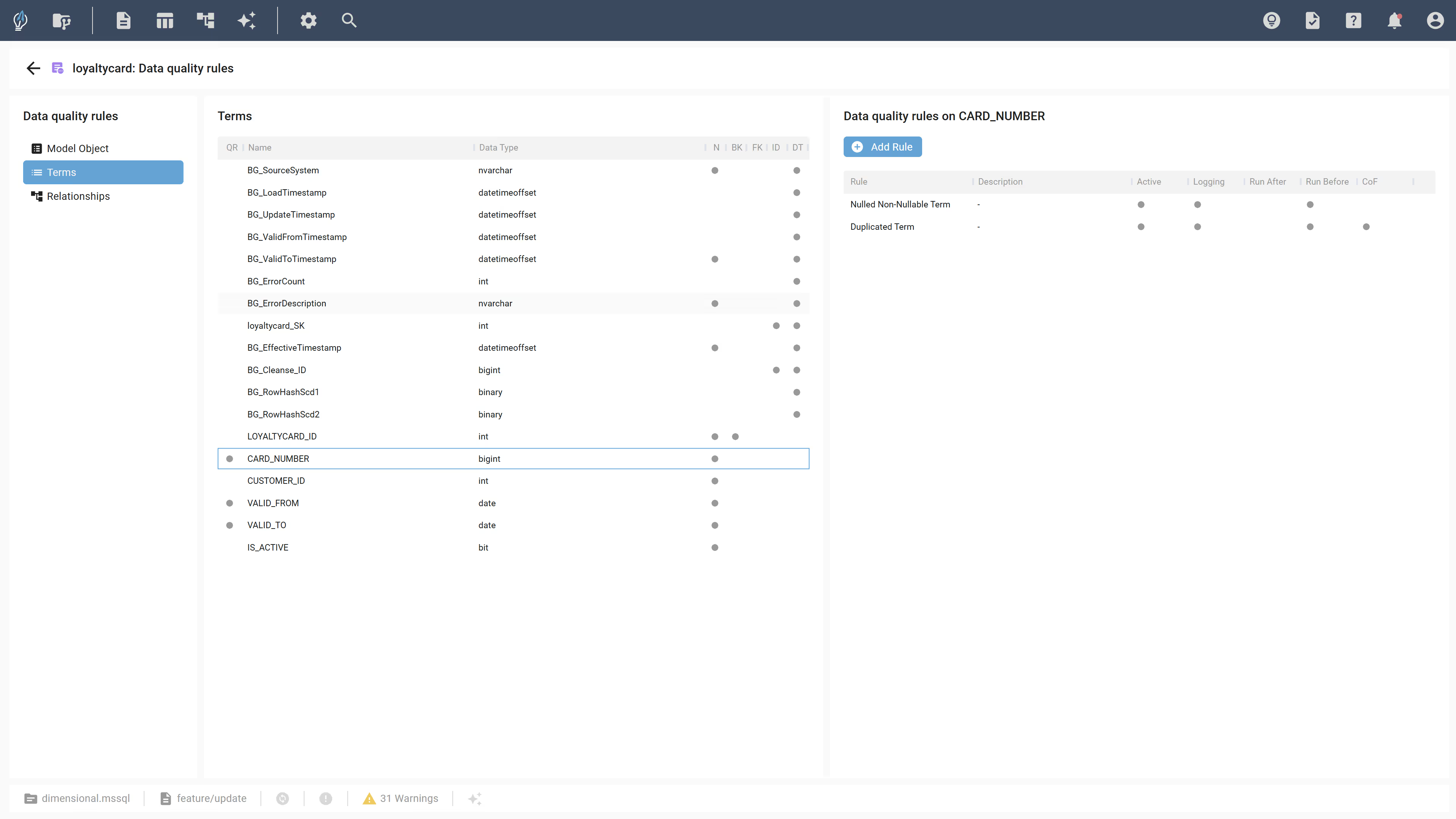

Automation as a foundation for scale

Effective quality enforcement requires systematic automation throughout the data lifecycle. In the latest version of biGENIUS-X generators, users can manually configure data quality rules for any model object, relationship, and term during their data modeling process in biGENIUS-X. This gives data teams control over which checks are applied, while tailoring these rules to specific datasets, business logics, and project needs.